Large Language Model on Windows Server 2019 | Supported by Hfimg

AWS Marketplace

https://aws.amazon.com/marketplace/pp/prodview-spkeiuyarjexu

Usage Instructions

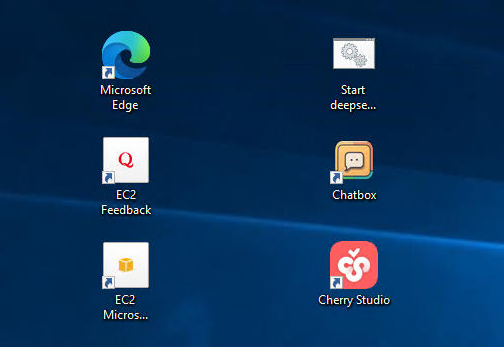

Several ways to interact with the large model

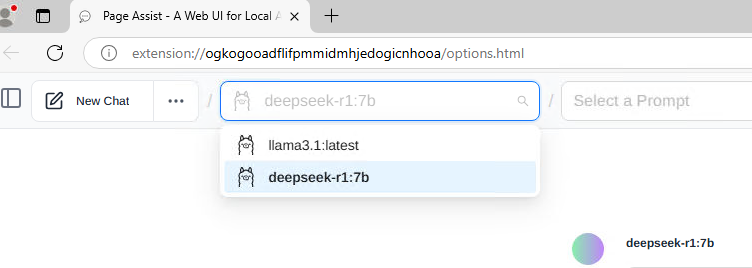

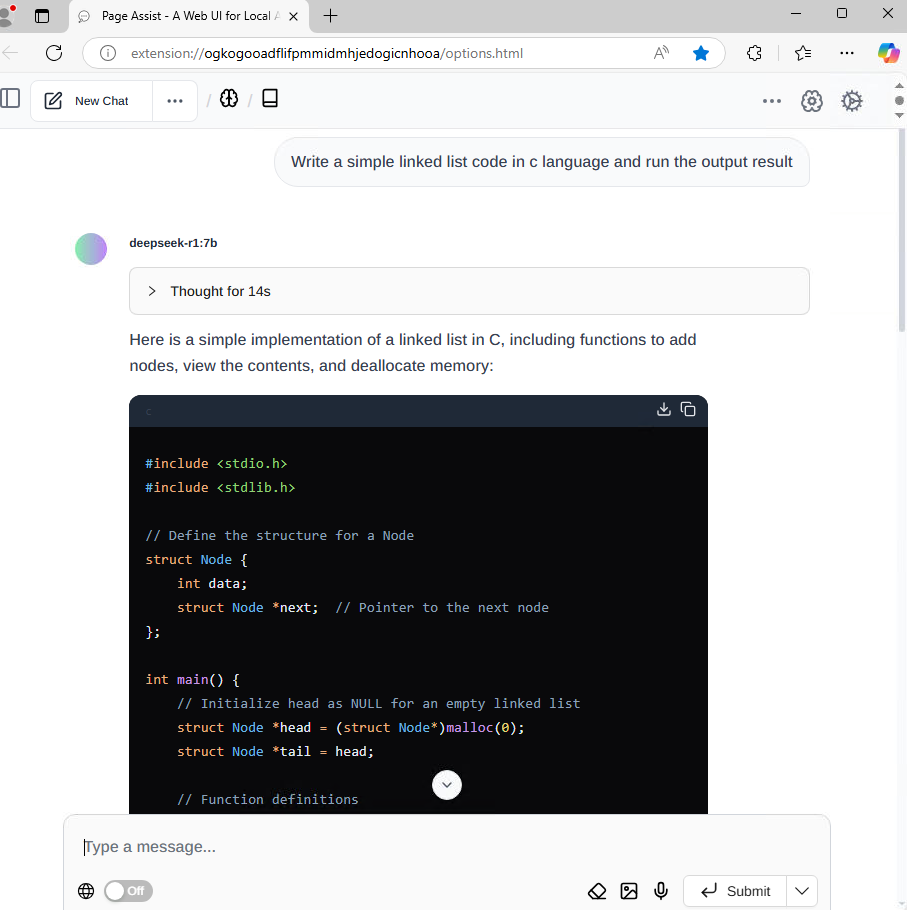

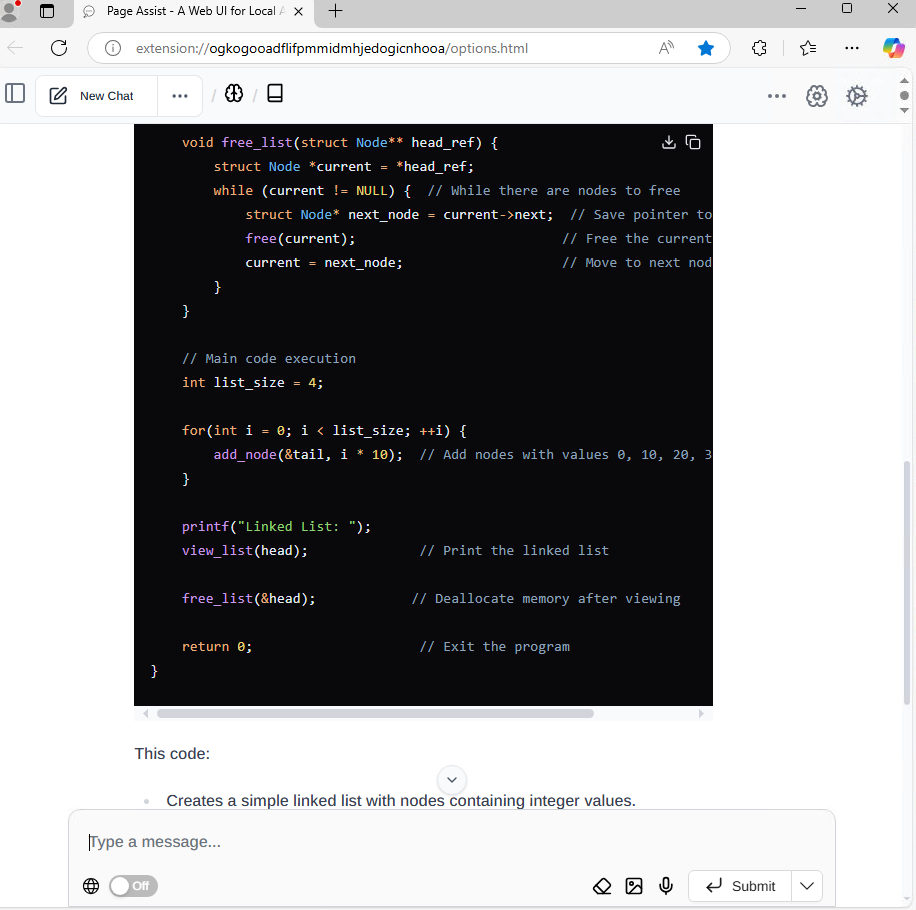

1.AI model plugged into the Edge browser

Click the start deepseek.bat batch file and wait a moment for the command line window and the Edge browser to open automatically.

To switch to another large model, right-click the batch file to edit it and change the third line to one of the following:

- start cmd /c "ollama run llama3.1:8b"

- start cmd /c "ollama run deepseek-r1:32b"

- start cmd /c "ollama run qwen3:14b"

- start cmd /c "ollama run mistral"

- start cmd /c "ollama run gemma3:12b"

...
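
The manual edit above can also be scripted. The following is a minimal Python sketch, not part of the product: it assumes (as the instructions state) that the model is selected on the third line of the batch file, and the helper name `switch_model` is hypothetical. The other lines of the batch file are not shown in this guide, so the sketch leaves them untouched.

```python
from pathlib import Path


def switch_model(batch_text: str, model: str) -> str:
    """Return batch_text with its third line replaced by an `ollama run` line.

    Assumption: the third line of the batch file is the one that starts
    the model, as described in the instructions above.
    """
    lines = batch_text.splitlines()
    if len(lines) < 3:
        raise ValueError("expected a batch file with at least three lines")
    lines[2] = f'start cmd /c "ollama run {model}"'
    # Batch files conventionally use Windows (CRLF) line endings.
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    # Hypothetical path; adjust to where the batch file lives on the server.
    path = Path("start deepseek.bat")
    if path.exists():
        updated = switch_model(path.read_text(), "qwen3:14b")
        path.write_bytes(updated.encode("utf-8"))
```

Keeping a backup copy of the batch file before running a script like this is advisable.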

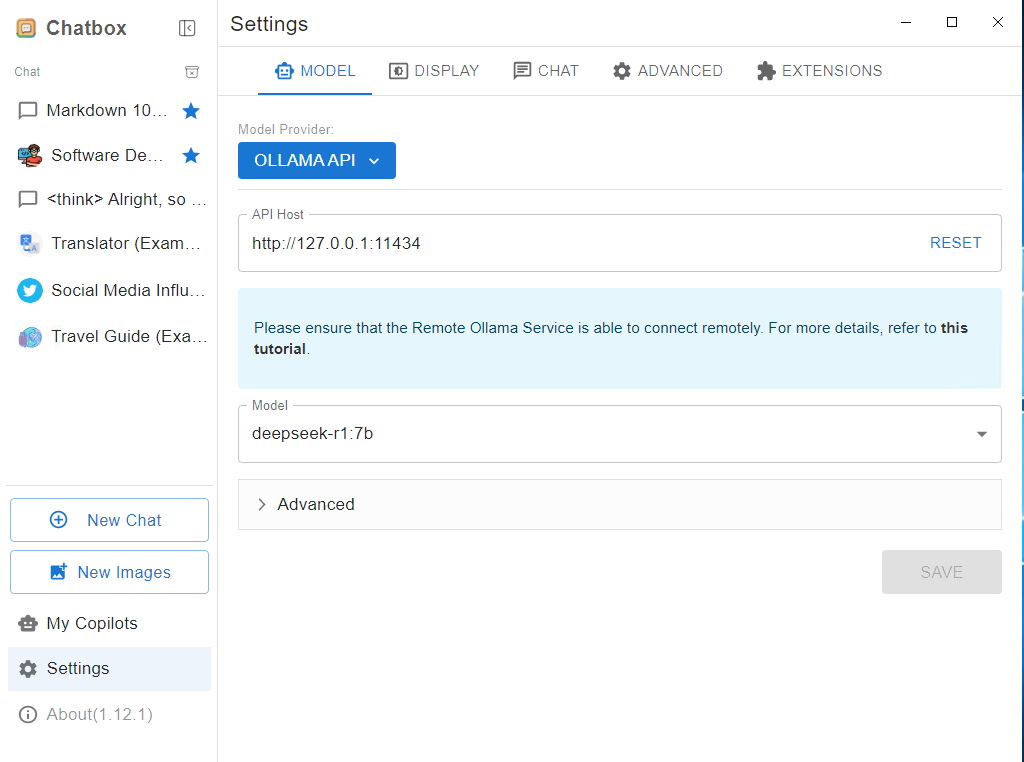

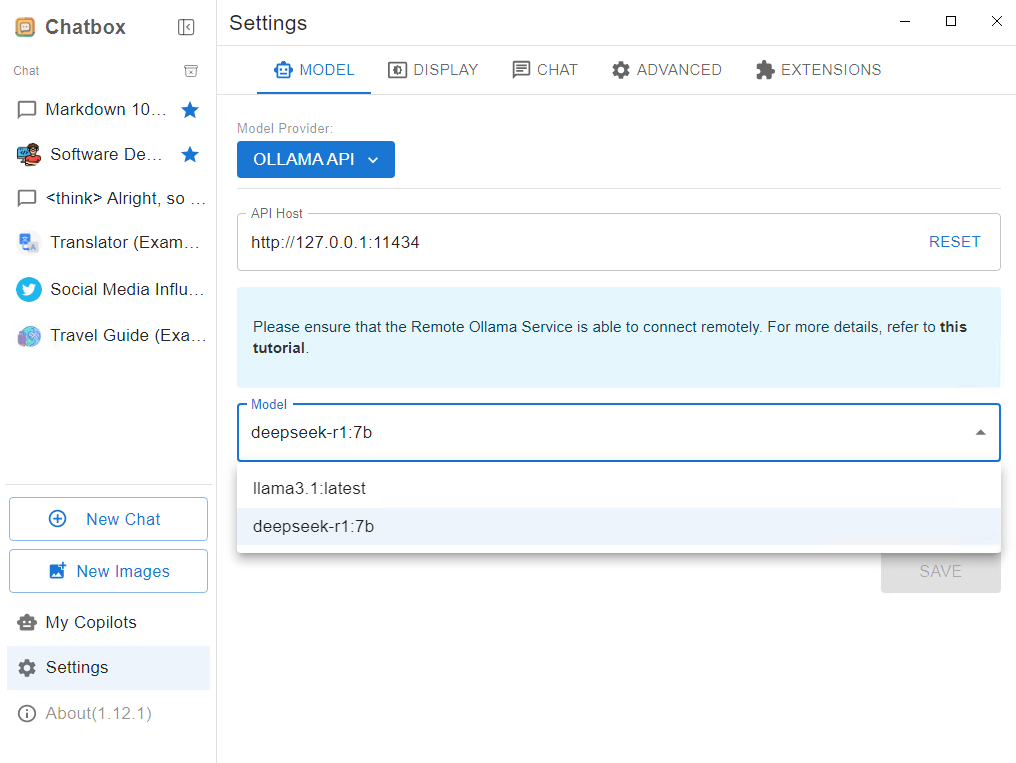

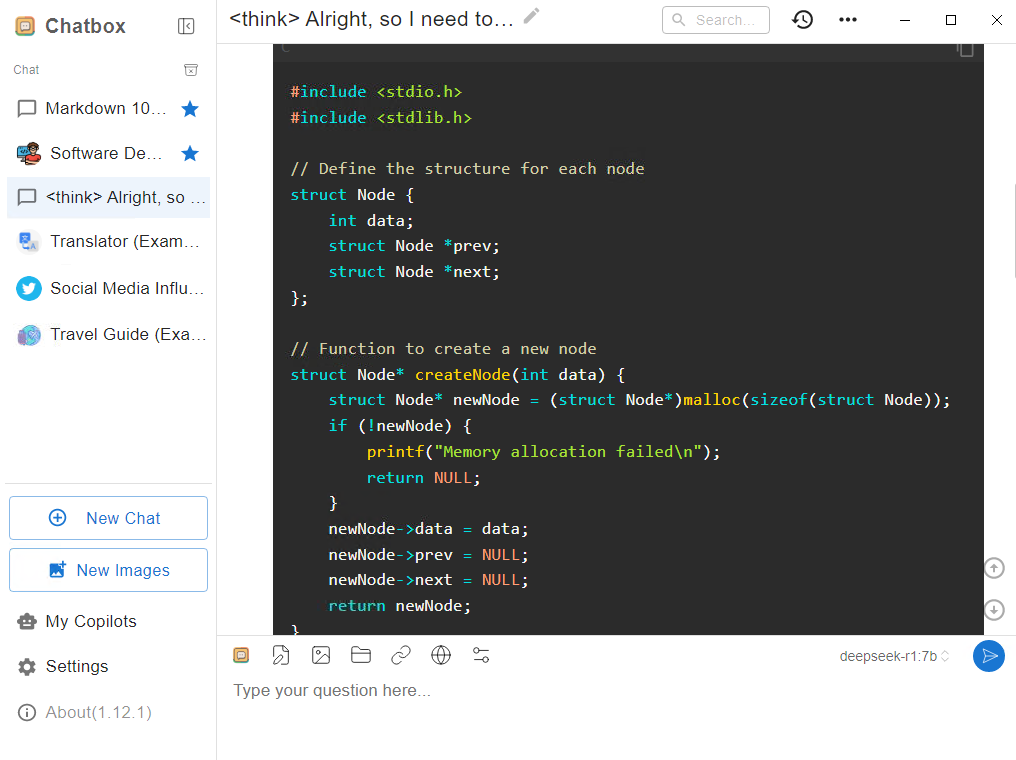

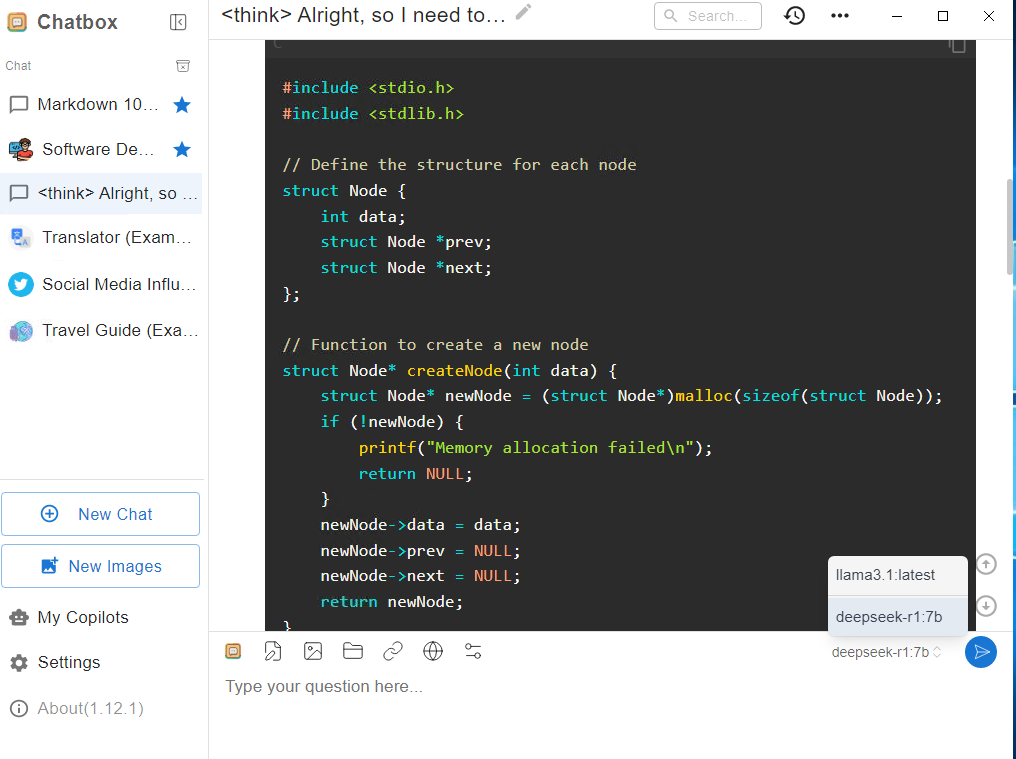

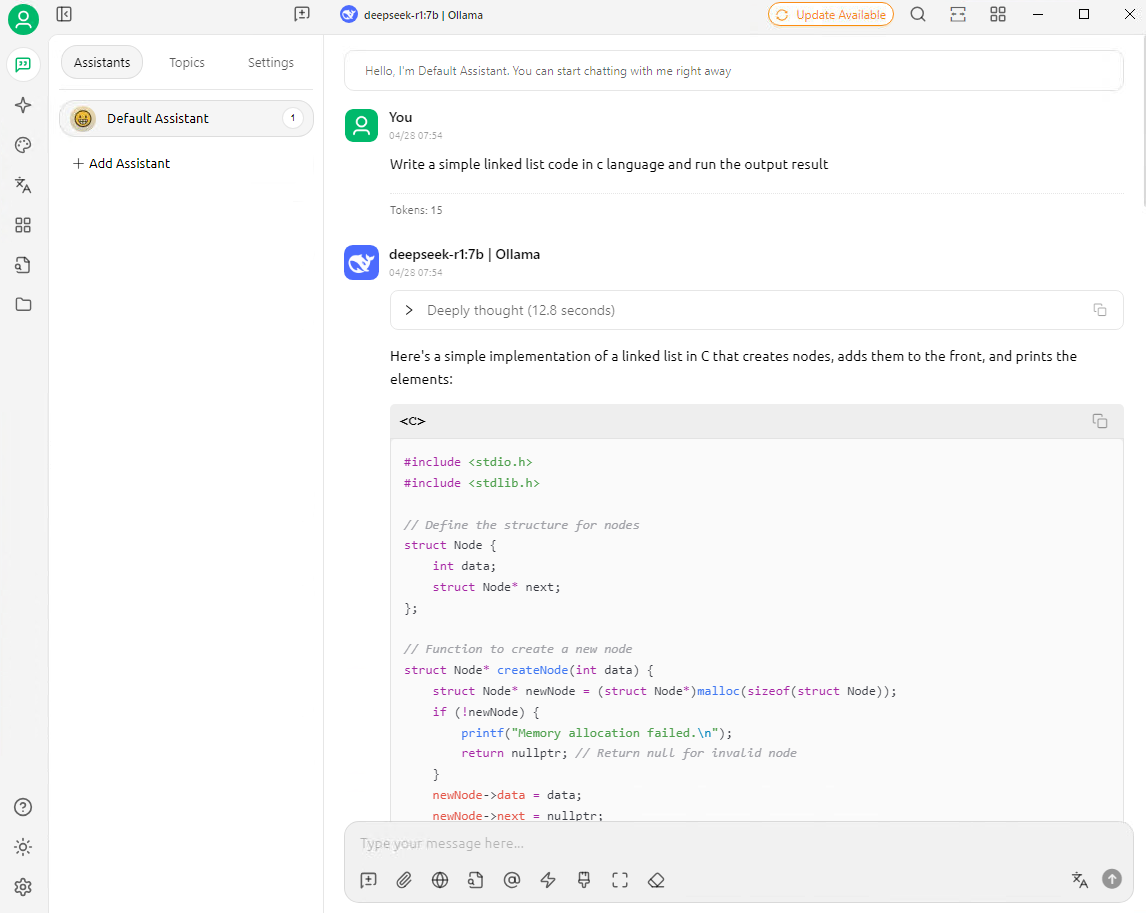

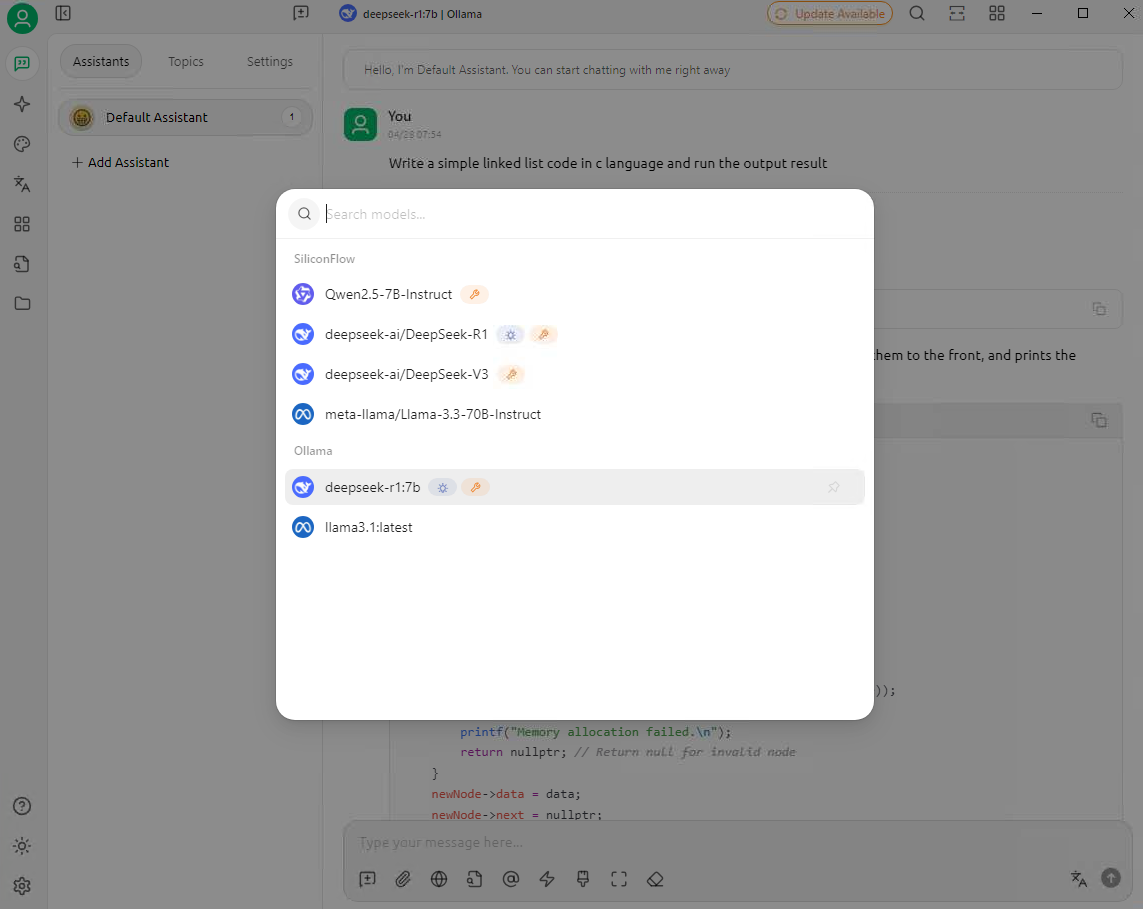

2.Chatbox

The basic parameters are already configured. To switch to another large model, refer to the following guide.

https://chatboxai.app/en/help-center/connect-chatbox-remote-ollama-service-guide#
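
When switching models in a client such as Chatbox, the model name has to match one that Ollama has already downloaded. As a rough sketch, the names can be listed through Ollama's `/api/tags` endpoint. The address `http://localhost:11434` is Ollama's default and is an assumption about this server; the function names are illustrative only.

```python
import json
import urllib.request

# Ollama's default local address; an assumption, adjust if the server differs.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> list[str]:
    """Extract sorted model names from an /api/tags response body."""
    return sorted(m["name"] for m in tags_payload.get("models", []))


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama service which models are already downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    for name in list_local_models():
        print(name)
```

The printed names (for example `qwen3:14b`) are the strings to select in the client's model setting.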

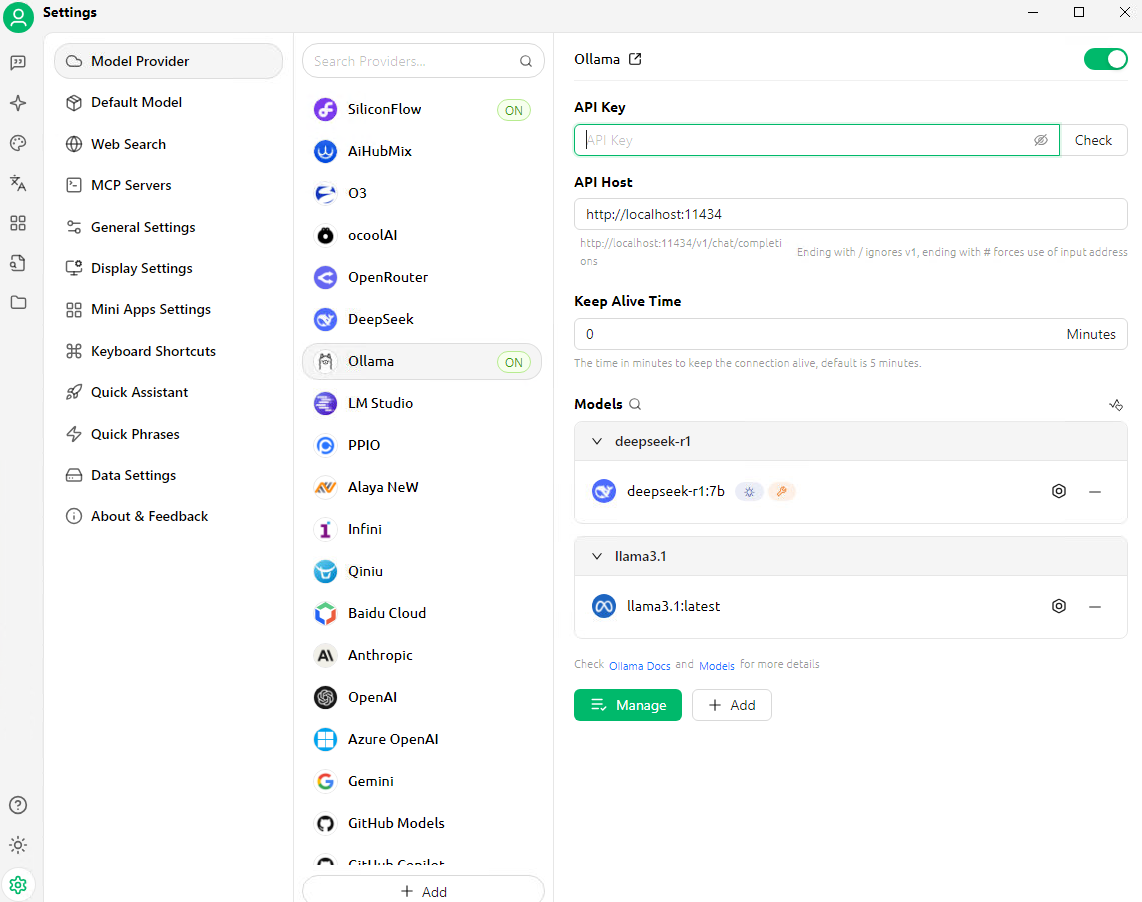

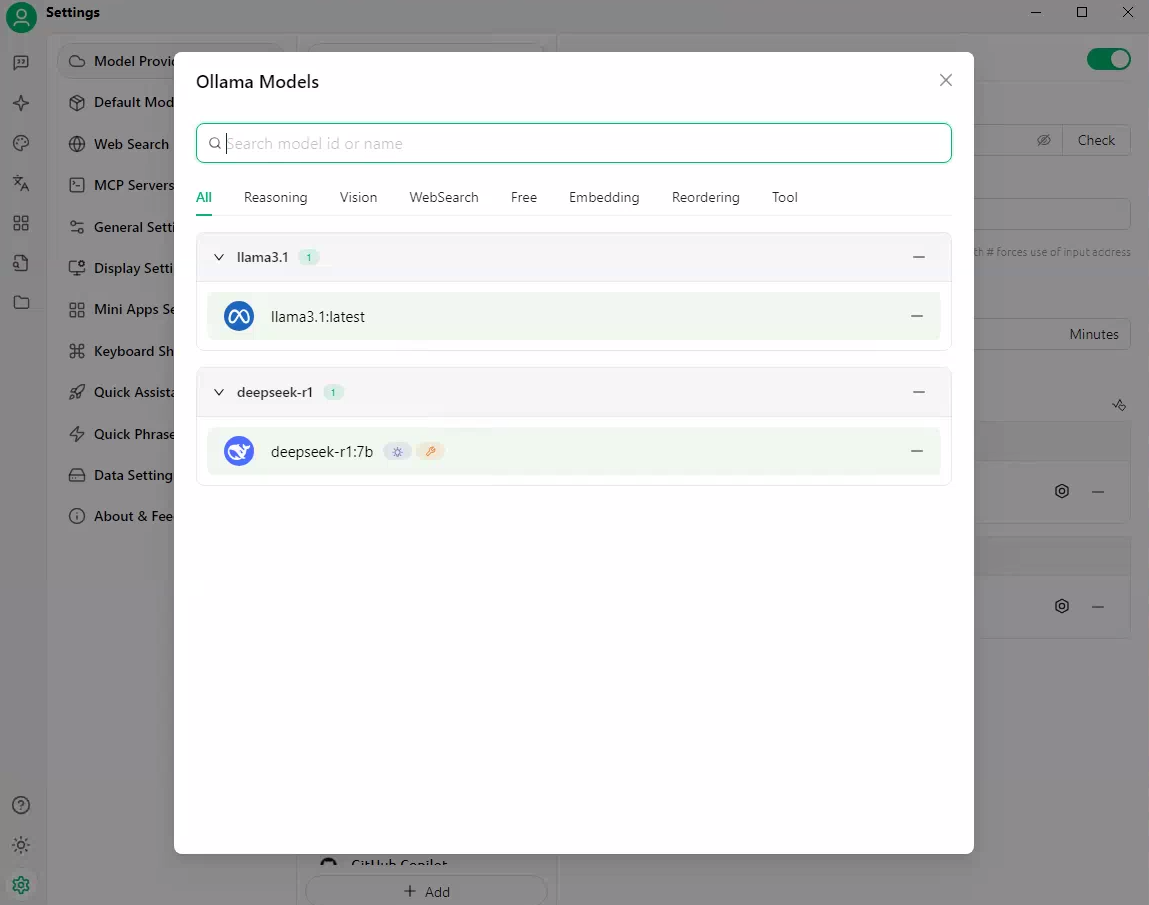

3.Cherry Studio

1.Function Introduction

https://docs.cherry-ai.com/cherry-studio/preview

2.The basic parameters are already configured. To switch to another large model, refer to the following guide.

https://docs.cherry-ai.com/pre-basic/providers/ollama

3.Knowledge Base Tutorial

https://docs.cherry-ai.com/knowledge-base/knowledge-base

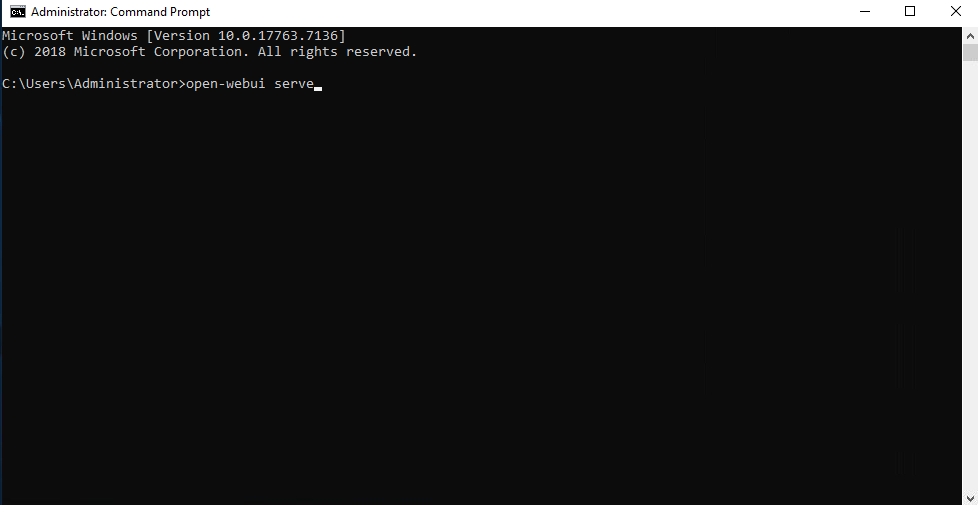

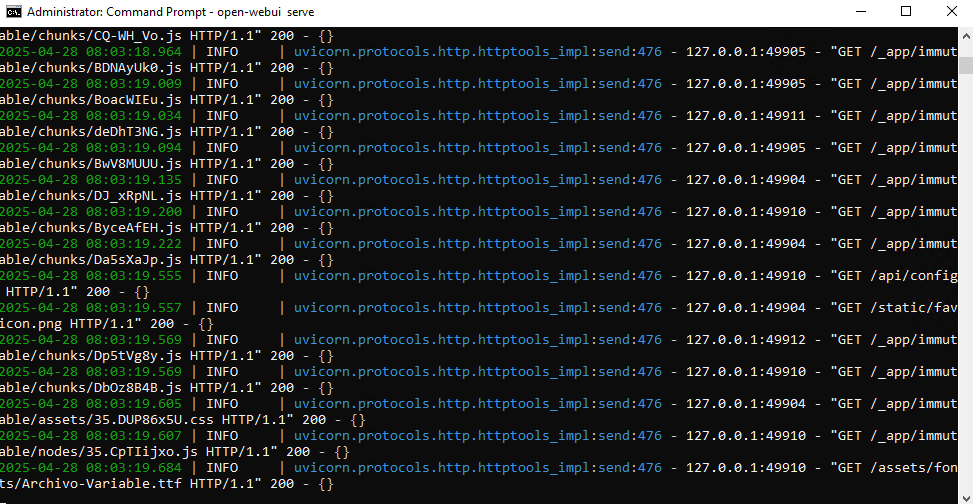

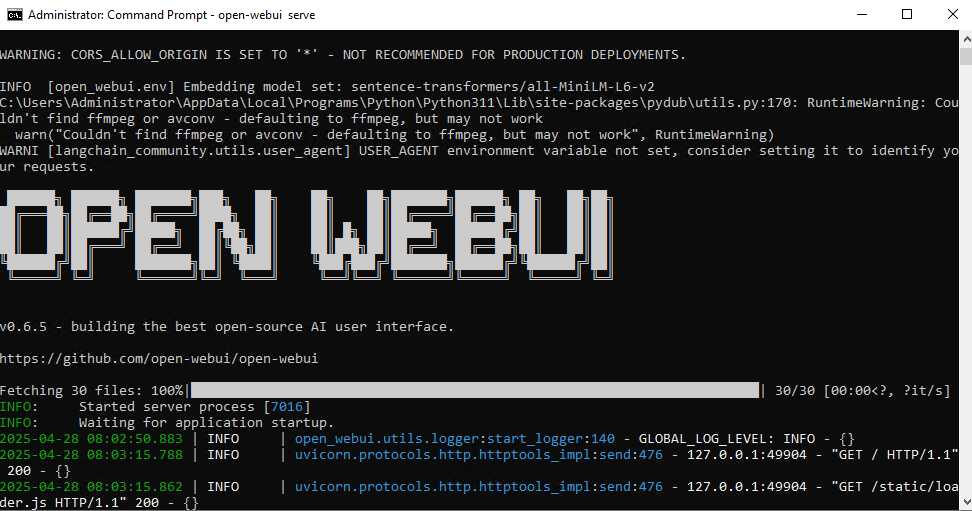

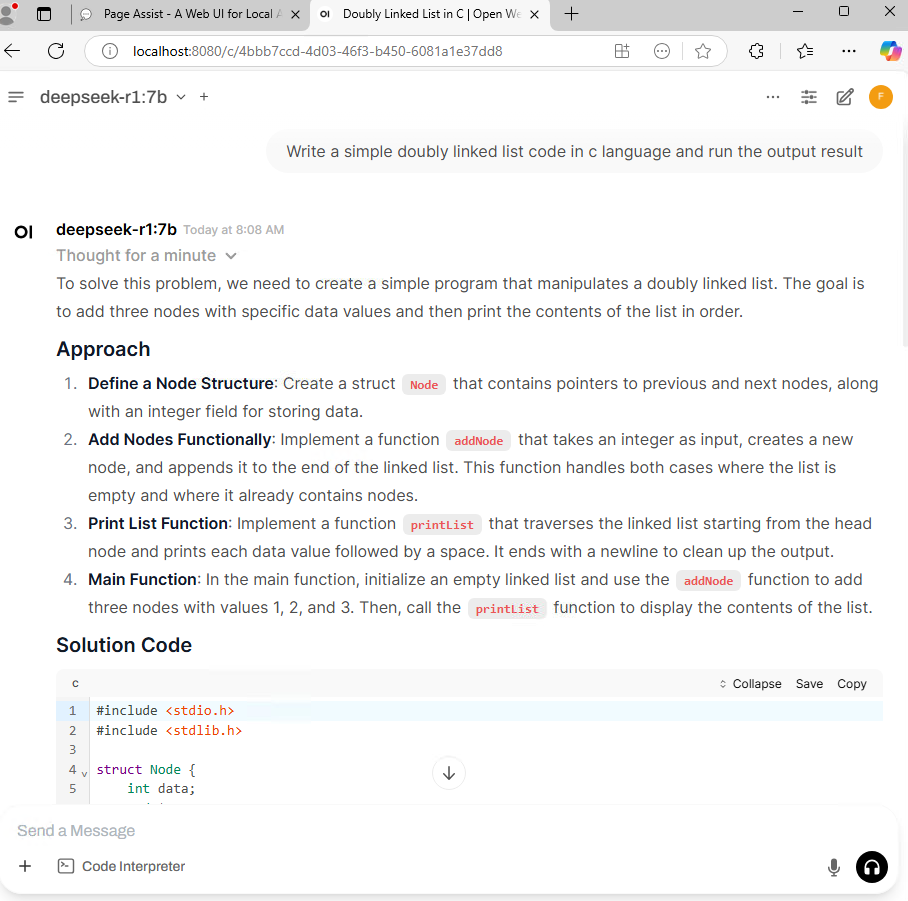

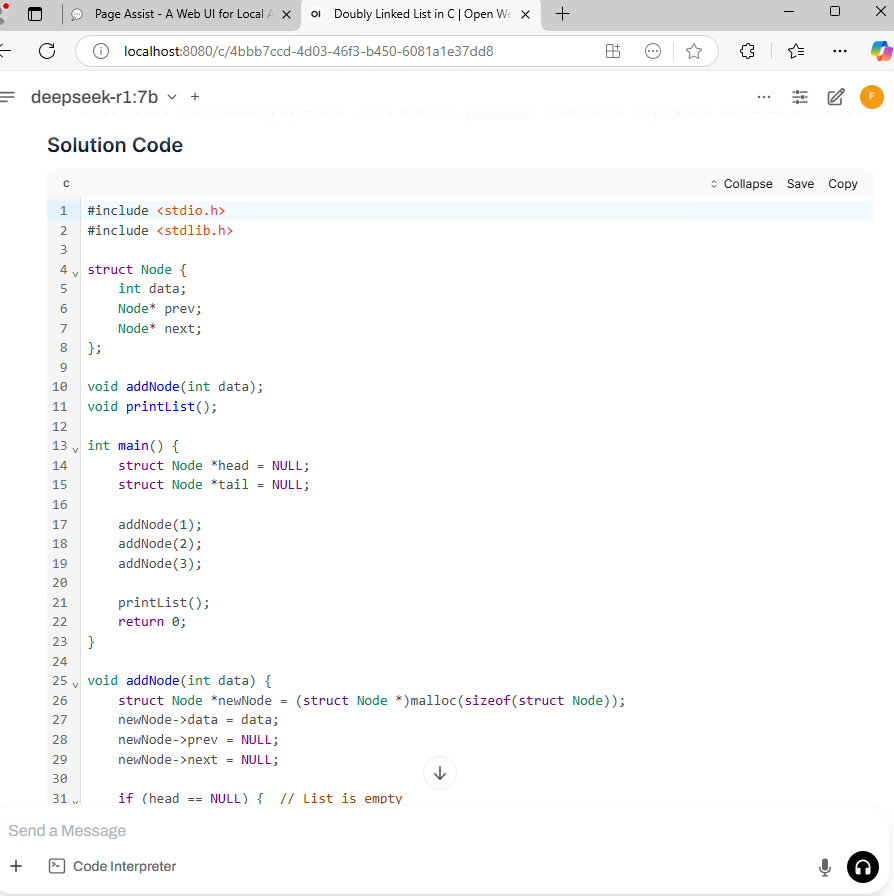

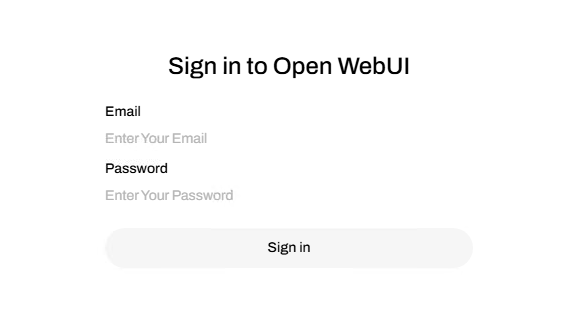

4.Open WebUI

Start Open WebUI

Open a command line window and type open-webui serve, then enter the default access address in the browser: http://localhost:8080
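
Open WebUI can take a while to start, so the page may not load immediately after `open-webui serve` is entered. A small sketch, assuming the default address above, that polls until the server answers; the helper name `wait_for_server` and the retry values are illustrative choices, not part of the product.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url: str, attempts: int = 30, delay: float = 2.0) -> bool:
    """Poll url until it responds; return False if it never does."""
    # Connect directly and ignore any system proxy, since the target is local.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    for _ in range(attempts):
        try:
            with opener.open(url, timeout=3):
                return True
        except urllib.error.HTTPError:
            # The server answered, even if with an error status.
            return True
        except (urllib.error.URLError, OSError):
            # Not listening yet; wait and retry.
            time.sleep(delay)
    return False


if __name__ == "__main__":
    if wait_for_server("http://localhost:8080"):
        print("Open WebUI is reachable")
    else:
        print("Open WebUI did not respond; check the command line window")
```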

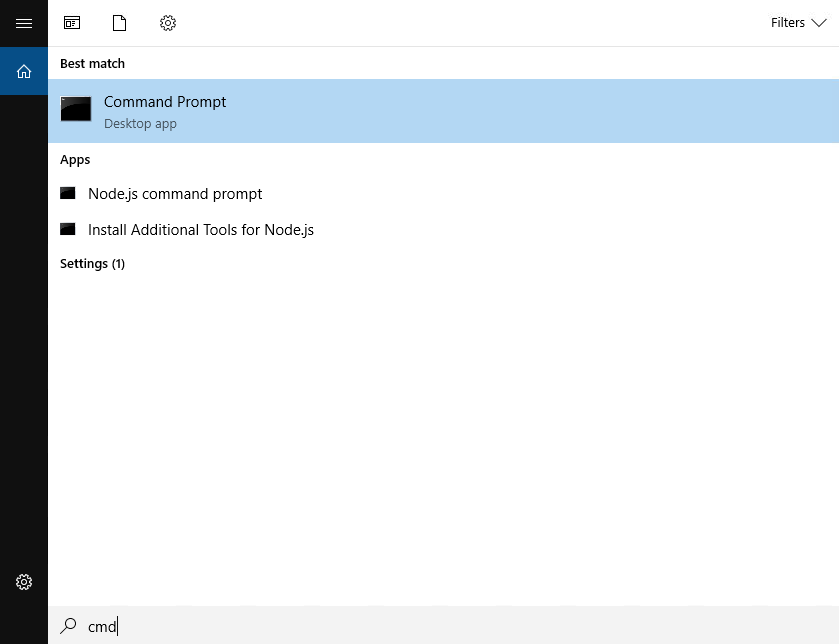

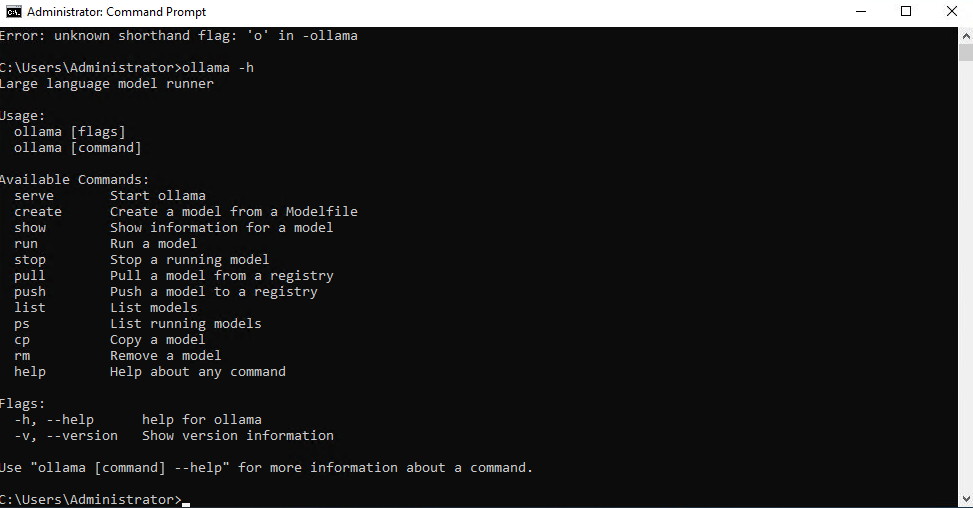

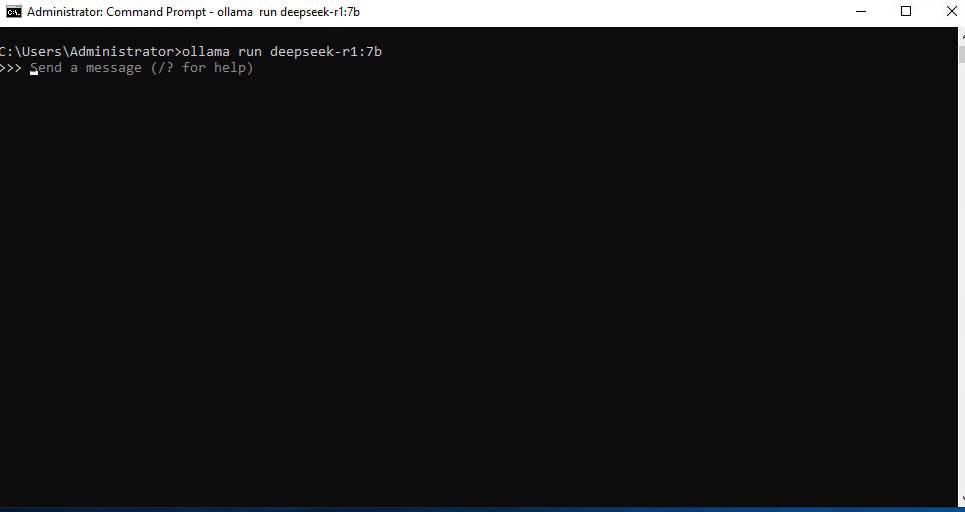

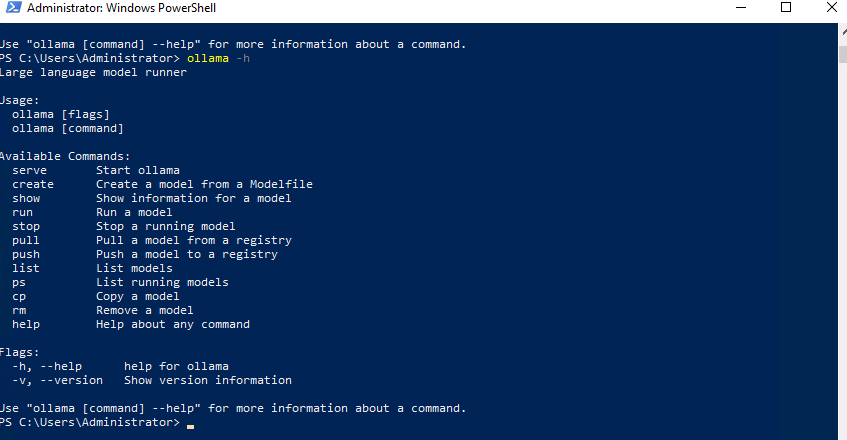

5.Download and run a large model

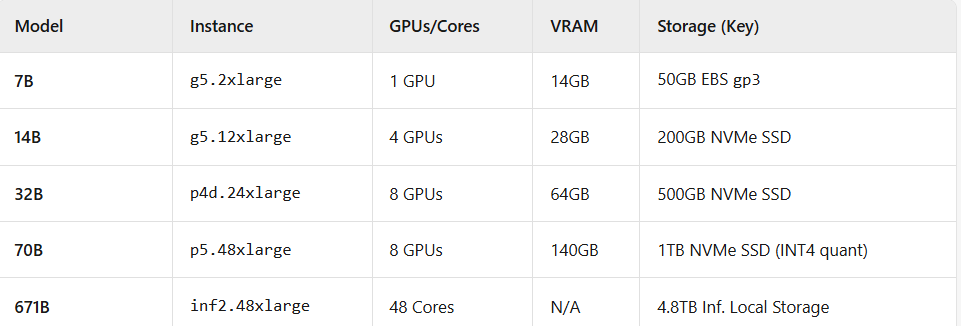

- ollama run deepseek-r1:7b

- ollama run deepseek-r1:14b

- ollama run deepseek-r1:32b

- ollama run deepseek-r1:70b

- ollama run deepseek-r1:671b

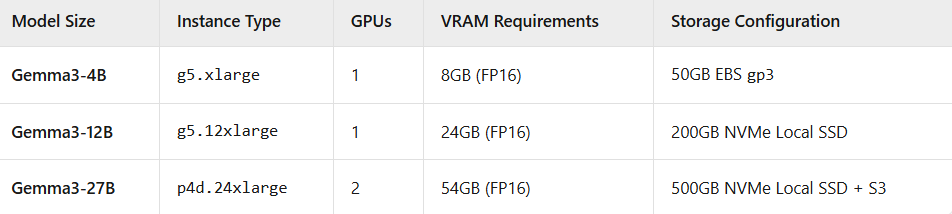

- ollama run gemma3:4b

- ollama run gemma3:12b

- ollama run gemma3:27b

- ollama run llama3.1

- ollama run llama3.1:70b

- ollama run llama3.1:405b

- ollama run llama3.3

- ollama run qwen3

- ollama run qwen3:14b

- ollama run qwen3:30b

- ollama run qwen3:32b

- ollama run qwen3:235b

- ollama run mistral
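
`ollama run` downloads a model if it is missing and then opens an interactive chat. To download several models without opening a chat for each, `ollama pull` can be scripted. The sketch below assumes `ollama` is on the PATH; the helper names and the model selection are illustrative.

```python
import subprocess


def pull_command(model: str) -> list[str]:
    """Build the command line that downloads a model without starting a chat."""
    return ["ollama", "pull", model]


def pull_models(models: list[str]) -> None:
    """Download each model in turn; stop at the first failure."""
    for model in models:
        subprocess.run(pull_command(model), check=True)


if __name__ == "__main__":
    # Example selection from the list above; large models need matching disk space.
    pull_models(["deepseek-r1:7b", "gemma3:4b"])
```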

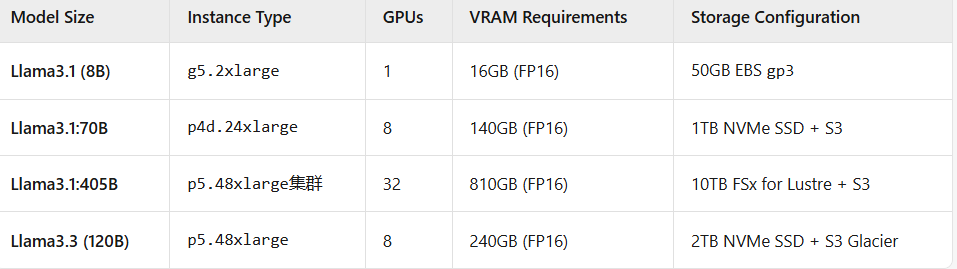

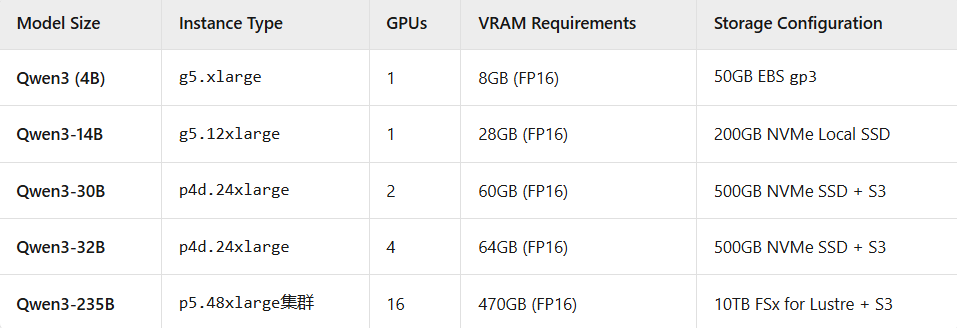

6.AWS EC2 configuration recommendations for different models